Why an Operating System for Data?

The data world has been battling a persistent crisis, one that has only been exacerbated by the growing volume of data that organizations own and manage. As data ecosystems progressively develop into complex, siloed systems, with a continuous stream of point solutions added to an already unwieldy mix, chaos has ensued for non-expert end users.

Complex infrastructures that require constant maintenance divert most of the engineering talent away from high-value work, such as developing data applications that directly impact the business and ultimately enhance the ROI of data teams.

Inflexible, unstable, and therefore fragile data pipelines turn data engineering teams into a bottleneck for even simple data operations. It is not uncommon to hear of a whole new data pipeline being spawned to answer one specific business question, or of 1000K data warehouse tables being created from 6K source tables.

Data Consumers suffer from unreliable data quality, Data Producers suffer from duplicated efforts to produce data for ambiguous objectives, and Data Engineers suffer from a flood of requests from both the production and consumption sides.

The lack of a good developer experience also robs data developers of the ability to declaratively manage resources, secure and compliant environments, and incoming requests, which would let them focus completely on data solutions. Because of these diversions and the lack of a unified platform, it is nearly impossible for data engineers to build short, crisp data-to-insight roadmaps.

Traditional Data Stack (TDS) → Modern Data Stack (MDS)

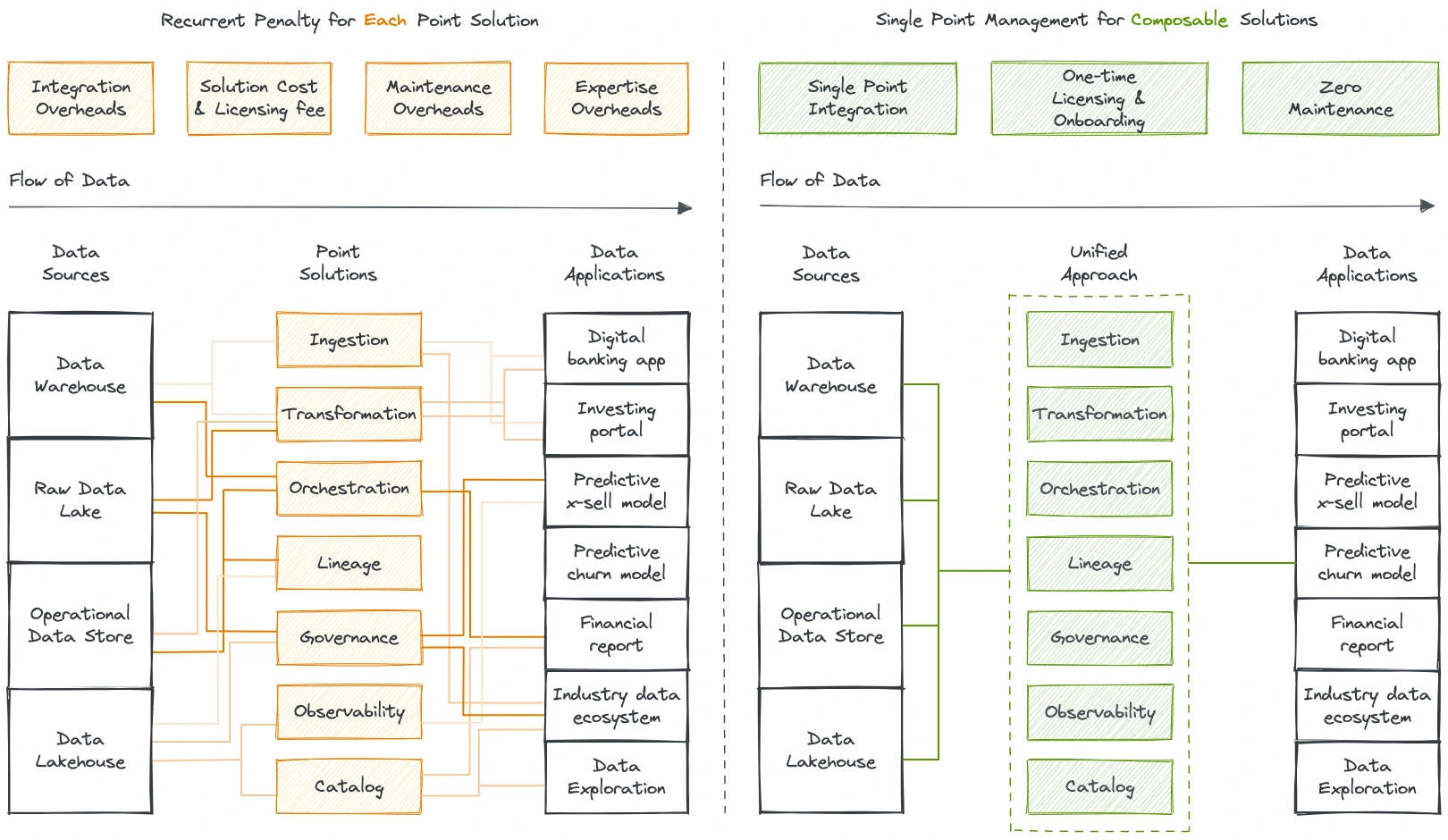

The concept of the modern data stack emerged over time to solve these common problems that plagued the data community at large. Targeting the problem in patches, however, led to a disconnected basket of solutions that ended in fragile data pipelines and in all data being dumped into a central lake, which eventually turned into unmanageable data swamps across industries. This compounded the problem by adding the cognitive load of a plethora of tools that had to be integrated and managed separately by expensive resources and experts.

Data swamps are no better than physical files in a basement: clogged with rich, useful, yet dormant data that businesses are unable to operationalise because of isolated and untrustworthy semantics. This untrustworthiness stems from the chaotic clutter of the MDS, overwhelmed with tools, integrations, and unstable pipelines. Yet another level of semantics is then required just to understand the low-level semantics, complicating the problem further.

Modern Data Stack (MDS) → Data First Stack (DFS)

The true value of the MDS cannot be dismissed. Its biggest achievement has perhaps been the revolutionary shift to the cloud, which has made data not just more accessible but also recoverable.

However, the need for a Data-First Stack arose largely from the overwhelming build-up of cruft within the bounds of data engineering. The Data-First Stack (DFS) takes a unifying, umbrella approach that targets the weak fragments of TDS and MDS as a whole instead of perpetuating their patchwork philosophy.

DFS, or the Data Operating System (DOS), brings together a curated set of self-service layers that eliminate redundant tools and processes, enabling a reusable, modular, and composable operating platform that elevates user productivity. Now, instead of grinding to integrate hundreds of scattered solutions, users can put data first and focus on the core objective: building data applications that directly uplift business outcomes.

The Data-First Stack is essentially an Operating System design. An OS is typically a program that manages all the other programs on a device, so that end users experience the outcomes those programs deliver instead of figuring out ‘how’ to run them. Most of us have experienced an OS on our laptops, our phones, and, in fact, on any interface-driven device. Users are hooked on these systems because they are shielded from the pain of booting, maintaining, and handling the low-level nuances of the day-to-day applications that directly bring them value.

The Data Operating System is, thus, relevant to both data-savvy and data-naive organizations. In summary, it enables a self-serve data infrastructure by abstracting users from the procedural complexities of applications and declaratively serving the outcomes.

Data Operating System Vision

The Data Operating System (DOS) is a Unified Architecture Design to abstract complex and distributed subsystems and offer a consistent outcome-first experience for non-expert end users.

A Data Operating System can be thought of as an internal developer platform for data engineers and data scientists. Just as an internal developer platform provides a set of tools and services to help developers build and deploy applications more easily, an internal data platform provides a set of tools and services to help data professionals manage and analyze data more effectively.

An internal data platform typically includes tools for data integration, processing, storage, and analysis, as well as governance and security features to ensure that data is managed, compliant and secure. The platform may also provide a set of APIs and SDKs to enable developers to build custom applications and services on top of the platform.

In analogy to the internal developer platform, an internal data platform is designed to provide data professionals with a set of building blocks that they can use to build data products and services more quickly and efficiently. By providing a unified and standardized platform for managing data, an internal data platform can help organizations make better use of their data assets and drive business value.

DOS is a milestone innovation in the data space, born out of need rather than out of the whim of a few geeks. Geeks were undeniably involved, though: they built the foundation and envisioned the objectives for DOS. This, of course, resulted in DOS becoming an open technology for the community to explore and enhance, the geeks’ favourite kind. The vision of the current state of DOS can be grouped into four pillars:

Transition from Maintenance-First to Data-First

The Data Operating System is the data stack that puts data first. As obvious as this may seem, it runs contrary to prevalent practices. Poor data engineers are currently stuck in a maintenance-first economy, spending countless hours working around infrastructure shortcomings and maintaining data pipelines.

DOS abstracts all the nuances of low-level data management, which would otherwise consume most of a data developer’s active hours. A declaratively managed system drastically reduces the scope for fragility and surfaces root cause analysis (RCA) lenses on demand, consequently optimising resources and ROI. This enables engineering talent to dedicate their time and resources to data and to building data applications that directly impact the business.

Faster deployment of data applications

Decoupling services is a huge accelerator in terms of speed. The API-first ideology of DOS enables modularization and interoperability with both native and external components, ensuring quicker deployments and rollback abilities. With central management and seamless discovery of open APIs, users can augment and manipulate data programmatically, dramatically increasing the speed of data operations.

Data developers can deploy workloads quickly because standard base configurations, which do not require environment-specific variables, eliminate configuration drift and the vast number of config files. The system auto-generates manifest files for apps, enabling CRUD operations, execution, and metadata storage on top. In short, DOS provides workload-centric development: data developers declare workload requirements, and DOS provisions the resources and resolves the dependencies. The impact shows up quickly as a visible increase in deployment frequency.
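The idea of a short workload declaration being expanded against one standard base configuration can be sketched as follows. This is a minimal illustration under stated assumptions, not the DOS manifest format: `BASE_CONFIG`, `deep_merge`, `generate_manifest`, and every field name are hypothetical.

```python
import copy
from typing import Any

# Hypothetical standard base configuration shared by every workload.
# Note that nothing here is environment-specific.
BASE_CONFIG: dict[str, Any] = {
    "version": "v1",
    "resources": {"cpu": "1", "memory": "2Gi"},
    "retries": 2,
}


def deep_merge(base: dict[str, Any], override: dict[str, Any]) -> dict[str, Any]:
    """Return a new dict where keys in `override` win, merging nested dicts."""
    merged = copy.deepcopy(base)
    for key, value in override.items():
        if isinstance(value, dict) and isinstance(merged.get(key), dict):
            merged[key] = deep_merge(merged[key], value)
        else:
            merged[key] = copy.deepcopy(value)
    return merged


def generate_manifest(name: str, requirements: dict[str, Any]) -> dict[str, Any]:
    """Expand a short workload declaration into a full (hypothetical) manifest."""
    manifest = deep_merge(BASE_CONFIG, requirements)
    manifest["name"] = name
    return manifest


# The developer declares only what differs from the base: more memory.
manifest = generate_manifest("daily-orders", {"resources": {"memory": "8Gi"}})
print(manifest)
```

Because the developer only declares the delta, there is a single source of defaults and no per-environment copy of the full file to drift out of sync.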

Freedom to innovate without collateral damage from failures

It is no secret that developers love to innovate and experiment. However, the cost of innovation has not always been in their favour, especially in the data space, which consumes huge resources. Yet the freedom to innovate fast and fail fast is what enables high-ROI teams. DOS absorbs the heat of innovation and saves time spent on experimentation through smart capabilities.

These include intelligent data movement, semantic playgrounds, rollback abilities across pipelines and data assets, and declarative transformations, where users specify the inputs and outputs and DOS automatically generates the code needed to run the experiments. Data developers can declaratively prep, deploy, and de-provision clusters, provision databases and sidecar proxies, and manage secrets for their lifetime with one-time credential updates, all through the simple, common syntax of a DSL.

SOTA data developer experience

Data Engineers are the victims of the current data ecosystem, as a recent study validates: it reports that 97% of data engineers suffer from burnout. The prevalent data stacks compel data engineers to work repeatedly on fixing fragile fragments of countless data pipelines, spawned as fast as new business questions arrive.

DOS enables a seamless experience for data developers by abstracting away repetitive and complex maintenance and integration overheads, while allowing them to use familiar CLIs to programmatically speak to qualified data and build applications that directly impact business decisions and ROI. Developer experience also improves significantly through the standardisation of key elements such as semantics, metrics, ontology, and taxonomy via consistent template prompts. All operations and meta details are auto-logged by the system to reduce process overheads.

Qualitative Impact of DOS

The Data Operating System (DOS) enables self-service for a broad band of data personas, including data engineers, business users, and domain teams. DOS allows a direct and asynchronous interface between data developers and data, eliminating complex layers of the infrastructure. Data developers can quickly spawn new applications and rapidly deploy to multiple target environments or namespaces with configuration templates, abstracted credential management, and declarative workload specifications. Containerised applications are consistently monitored and continuously tested for high uptime.

Democratised data inherently enables the organisation to shift accountability for data closer to the source. Because abstracted interfaces make data easier to understand and operate, data producers regain control over the data they produce instead of going through centralised data engineering teams over multiple iterations to establish accurate business data models. The system connects to all data source systems and allows data producers to augment the data with business semantics, making data products addressable.

DOS also eliminates integration overheads associated with maintaining multiple point solutions by using declaratively operable basic building blocks or primitives. With more freed-up resources, clear policy and quality directives, and local data ownership, governance and observability become key considerations instead of afterthoughts.

Quantitative Impact of DOS

The impact of DOS is observable within weeks instead of months or years through directly measurable metrics. DOS significantly cuts down the time to ROI (TTROI), which comprises sub-metrics such as time to insight, time to action, and time to deployment.

The total cost of data product ownership is reduced enough to enable the development of multiple data products within the same band of available resources. Metrics used specifically in high-innovation and, consequently, high-performance environments also move in the right direction with visible business impact: hours saved per data developer go up, while the cost of failed experiments and time to recovery come down.

Implementation Cornerstones to Execute the DOS Vision

Artefact first, CLI second, GUI last

DOS is built to make life easy for Data Developers. With the DOS Command Line Interface (CLI), data developers have a familiar environment to run experiments and develop their code. The artefact-first approach puts data at the centre of the platform and provides a flexible infrastructure with a suite of loosely coupled and tightly integrable primitives that are highly interoperable. Data developers can use DOS to streamline complex workflows with ease, taking advantage of its modular and scalable design.

Being artefact-first with open standards, DOS can also be used as an architectural layer on top of any existing data infrastructure, enabling it to interact with heterogeneous components, both native and external to DOS. Thus, organizations can integrate their existing data infrastructure with new and innovative technologies without having to completely overhaul their existing systems.

It’s a complete self-service interface for developers where they can declaratively manage resources through APIs, CLIs, and even GUIs if it strikes their fancy. The intuitive GUIs allow the developers to visualise resource allocation and streamline the resource management process. This can lead to significant time savings and enhanced productivity for the developers, who can easily manage the resources without needing extensive technical knowledge. GUIs also allow business users to directly integrate business logic into data models for seamless collaboration.

Inherent DataOps

The DOS ecosystem automatically follows DataOps standards as a consequence of its unified and declarative architecture. Decoupled components and layers enable an agile development process, with continuous integration and continuous deployment for data applications through highly collaborative interfaces that cut down unnecessary iterations. Data developers can respond better to changing business needs with auto-integration and auto-deployment, while also reducing the risk of downtime with alert-triggered rollbacks. To be more preventive, pipelines can also be configured to auto-trigger once quality expectations are met instead of on a fixed schedule.
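Triggering a pipeline on met quality expectations rather than on a clock can be sketched like this. It is a minimal, hypothetical illustration: `Expectation`, `failed_expectations`, and `maybe_trigger` are invented names, and a real setup would evaluate expectations against tables rather than in-memory rows.

```python
from dataclasses import dataclass
from typing import Callable

Row = dict[str, object]


@dataclass
class Expectation:
    """A named, hypothetical quality check over a batch of rows."""
    name: str
    check: Callable[[list[Row]], bool]


def failed_expectations(rows: list[Row], expectations: list[Expectation]) -> list[str]:
    """Return the names of all expectations the batch does not satisfy."""
    return [e.name for e in expectations if not e.check(rows)]


def maybe_trigger(rows: list[Row], expectations: list[Expectation],
                  run_pipeline: Callable[[list[Row]], None]) -> bool:
    """Run the downstream pipeline only when every expectation passes."""
    failures = failed_expectations(rows, expectations)
    if failures:
        print(f"Pipeline held back; failed: {failures}")
        return False
    run_pipeline(rows)
    return True


expectations = [
    Expectation("not_empty", lambda rows: len(rows) > 0),
    Expectation("no_null_ids", lambda rows: all(r.get("id") is not None for r in rows)),
]

ran: list[list[Row]] = []
batch = [{"id": 1}, {"id": None}]
maybe_trigger(batch, expectations, ran.append)                   # held back
maybe_trigger([{"id": 1}, {"id": 2}], expectations, ran.append)  # runs
```

The design choice is that a bad batch never reaches downstream consumers in the first place, instead of being caught after a scheduled run has already propagated it.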

DOS is highly fault-tolerant, with no central point of failure and the flexibility to spin up hotfix environments, allowing for maximum uptime and high availability. Data Developers can create and modify namespaces in isolated clusters, create and execute manifest files, and independently provision storage, compute, and other DOS resources for self-service development environments. Real-time debugging in both dev and prod implementations enables rapid identification and resolution of issues before they escalate into bigger problems.

Multi-hop rollbacks also provide an added layer of protection against unexpected issues, allowing rapid restoration of previous configurations in the event of a problem. Finally, declarative governance and quality, in tandem with pre-defined SLAs, make DataOps a seamless, integrated part of the larger data management workflow: a core component of the data management approach rather than an afterthought.
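A multi-hop rollback simply means restoring a configuration several versions back, not only the previous one. The sketch below assumes a hypothetical `ConfigHistory` that keeps every applied version in memory; it is not how DOS stores versions, and it takes the simple route of discarding the newer versions on rollback.

```python
class ConfigHistory:
    """Keeps every applied configuration so an earlier version can be restored."""

    def __init__(self, initial: dict) -> None:
        self._versions: list[dict] = [dict(initial)]

    @property
    def current(self) -> dict:
        return self._versions[-1]

    def apply(self, config: dict) -> None:
        """Record a new configuration as the latest version."""
        self._versions.append(dict(config))

    def rollback(self, hops: int = 1) -> dict:
        """Go back `hops` versions, discarding the newer ones."""
        if hops < 1 or hops >= len(self._versions):
            raise ValueError(
                f"cannot roll back {hops} hop(s) with {len(self._versions)} version(s)"
            )
        del self._versions[-hops:]
        return self.current


history = ConfigHistory({"image": "etl:1.0"})
history.apply({"image": "etl:1.1"})
history.apply({"image": "etl:1.2"})
restored = history.rollback(hops=2)  # straight back to etl:1.0
print(restored)
```

A production system would more likely record the rollback itself as a new version for auditability; the list-truncation approach here just keeps the example short.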

Data Infrastructure as a Service (DIaaS)

DOS is synonymous with a programmable data platform that encapsulates the low-level data infrastructure and enables data developers to shift from build mode to operate mode. DOS achieves this through infrastructure-as-code (IaC) capabilities to create and deploy config files or new resources and to decide how to provision, deploy, and manage them, for instance, provisioning an Amazon RDS database through IaC. Data developers can, therefore, declaratively control data pipelines through a single workload specification or single-point management.
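The core of the IaC idea is that you declare the desired state and the platform computes what has to change, in the spirit of the plan step found in IaC tools such as Terraform. The sketch below shows only that diffing step; the resource names and attributes are hypothetical, and no real provisioning happens.

```python
def plan(desired: dict[str, dict], actual: dict[str, dict]) -> dict[str, list[str]]:
    """Diff declared resources against what exists and list the needed actions."""
    return {
        "create": sorted(name for name in desired if name not in actual),
        "update": sorted(
            name for name in desired
            if name in actual and desired[name] != actual[name]
        ),
        "delete": sorted(name for name in actual if name not in desired),
    }


# Hypothetical declared state (what the developer writes) ...
desired = {
    "orders-db": {"engine": "postgres", "storage_gb": 100},
    "events-cluster": {"nodes": 3},
}
# ... and hypothetical observed state (what currently exists).
actual = {
    "orders-db": {"engine": "postgres", "storage_gb": 50},
    "legacy-cache": {"nodes": 1},
}

print(plan(desired, actual))
```

Running it would report `events-cluster` to create, `orders-db` to update (its storage changed), and `legacy-cache` to delete, without the developer scripting any of those steps.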

DIaaS also enables the decoupling of physical and logical data layers, ensuring complete democratisation for both the business and engineering sides. Business and domain users integrate business logic without interfering with physical assets. Data engineers can use business models for data mapping instead of struggling with partial business views, reducing unnecessary iterations.

This approach consequently depletes cruft (technical debt), since data pipelines are declaratively managed with minimal manual intervention, allowing for resource scaling, elasticity, and speed (TTROI). The infrastructure also fulfils higher-order operations such as data governance, observability, and metadata management based on declarative requirements.

In summary, DIaaS enables the three components needed to deploy the architectural data product quantum: Data as Code, Self-Service Data Infrastructure, and context-aware Data & Metadata.

Data-as-a-Software

In prevalent data stacks, we do not just suffer from data silos; there’s a much deeper problem. We also suffer tremendously from data code silos. DOS approaches data as software and wraps code systematically to enable object-oriented capabilities such as abstraction, encapsulation, modularity, inheritance, and polymorphism across all the components of the data stack. As an architectural quantum, the code becomes a part of the independent unit that is served as a Data Product.

DOS implements infrastructure as code to manage and provision low-level components with state-of-the-art data developer experience. Data developers can customize high-level abstractions on a need basis, use lean CLI integrations they are familiar with, and overall experience software-like robustness through importable code, prompting interfaces with smart recommendations, and on-the-fly debugging capabilities.

CRUD operations on data and data artefacts, such as creation, deletion, and updates, follow the software development lifecycle, undergoing review cycles, continuous testing, quality assurance, post-deployment monitoring, and end-to-end observability. This enables reusability and extensibility of infra artefacts and data assets, which underpins the data product paradigm.

Data as a product is a subset of the data-as-a-software paradigm wherein data inherently behaves like a product when managed like a software product, and the system is able to serve data products on the fly. This approach enables high clarity into downstream and upstream impacts along with the flexibility to zoom in and modify modular components.

Architectural Pillars of DOS

Multilayered Kernel Architecture

DOS is enabled through a multilayered kernel architecture that allows dedicated platform engineering teams to operate the system’s core primitives without affecting the day-to-day operations of business or application users. Like the kernel of any operating system, it facilitates communication between the users and primitives of the data operating system on the one hand and the binary world of the machines on the other. Each layer is an abstraction that translates low-level APIs into high-level APIs for use by the other layers, components, or users of a Data Operating System. The kernel can logically be separated into three layers: the cloud kernel, the core kernel, and the user kernel.

The layered architecture promotes a unified experience, as opposed to the complexity overheads that the microservices architecture of the modern data stack imposes. While a microservices architecture has some benefits, it also comes with performance, maintenance, security, and expertise overheads that can ultimately leave the organisation with low ROI on data teams and a long time to ROI for data applications. The layered approach, on the other hand, promotes a loosely coupled yet tightly integrated set of components that overcomes these disadvantages of the modern data stack.

Cloud Kernel

Cloud Kernel makes it possible for a Data Operating System to work with multiple cloud platforms without requiring specific integrations for each one. The Data Operating System uses several custom-built operators to abstract the VMs and network systems provisioned by the cloud provider. This frees users from worrying about the underlying protocols of the cloud provider: they communicate only with the high-level APIs provided by the cloud kernel, which makes DOS truly cloud-agnostic.

Core Kernel

Core Kernel provides another degree of abstraction by further translating the APIs of the cloud kernel into higher-order functions. From a user’s perspective, the core kernel is where the activities like resource allocation, orchestration of primitives, scheduling, and database management occur. The cluster and compute that you need to carry out processes are declared here; the core kernel then communicates with the cloud kernel on your behalf to provision the requisite VMs or node pools and pods.
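The relationship between the cloud kernel and the core kernel can be pictured as two layers of abstraction: per-provider operators at the bottom, and a provider-free cluster declaration on top. In the sketch below, `CloudOperator`, the `Fake*Operator` classes, `CoreKernel`, and the size table are all hypothetical stand-ins that only return strings; no real cloud API is called.

```python
from abc import ABC, abstractmethod
from dataclasses import dataclass


class CloudOperator(ABC):
    """Cloud-kernel layer: one operator per provider hides its native API."""

    @abstractmethod
    def provision_node_pool(self, name: str, nodes: int, machine: str) -> str: ...


class FakeAWSOperator(CloudOperator):
    def provision_node_pool(self, name: str, nodes: int, machine: str) -> str:
        return f"aws:{name}:{nodes}x{machine}"


class FakeGCPOperator(CloudOperator):
    def provision_node_pool(self, name: str, nodes: int, machine: str) -> str:
        return f"gcp:{name}:{nodes}x{machine}"


@dataclass
class ClusterDeclaration:
    """What a user declares at the core-kernel level: no provider details."""
    name: str
    size: str  # "small" or "large"


class CoreKernel:
    # Hypothetical mapping from abstract sizes to node counts and machine classes.
    SIZES = {"small": (2, "standard-4"), "large": (8, "standard-16")}

    def __init__(self, cloud: CloudOperator) -> None:
        self.cloud = cloud

    def create_cluster(self, decl: ClusterDeclaration) -> str:
        """Translate a high-level declaration into a cloud-kernel call."""
        nodes, machine = self.SIZES[decl.size]
        return self.cloud.provision_node_pool(decl.name, nodes, machine)


decl = ClusterDeclaration("adhoc-analytics", "small")
print(CoreKernel(FakeAWSOperator()).create_cluster(decl))
print(CoreKernel(FakeGCPOperator()).create_cluster(decl))
```

The same declaration works unchanged against either operator, which is the cloud-agnostic property the layering is meant to provide.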

User Kernel

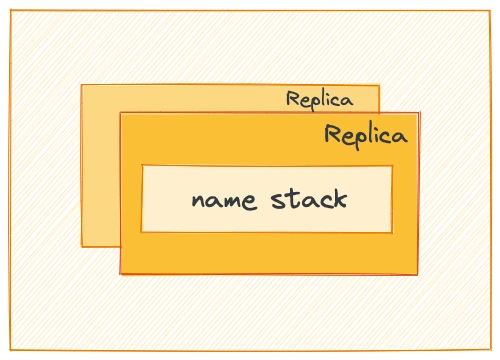

User kernel is the third layer of abstraction, over the APIs of the core and cloud kernels. The secondary extension points and programming paradigms of the Data Operating System, like the various Stacks and certain Primitives, can be envisioned as working at this level. While you can communicate directly with the cloud kernel APIs, as a user of the Data Operating System you can instead choose to work with the core and user kernels alone. The core kernel is where users work with essential features like Security, Metadata Management, and Resource Orchestration. In contrast, the user kernel can be thought of as the layer where users have complete flexibility in choosing which components or primitives to leverage and which they do not require.

DOS Primitives

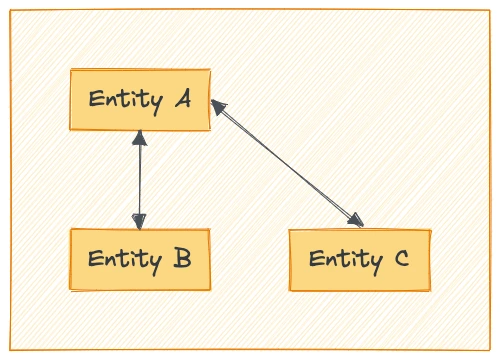

Becoming data-first within weeks is possible through the high internal quality of the composable Data Operating System architecture: Unification through Modularisation. Modularisation is possible through a finite set of primitives that have been uniquely identified as essential to the data ecosystem. These primitives are distributed across the kernel layers to enable first, second, and multi-degree components that facilitate low-level operations and complex use-case-specific applications.

The Data Operating System, technically defined, is a finite set of unique primitives that talk to each other to declaratively enable any and every operation that data users, generators, or operators require. Just as a common set of core building blocks, like Lego pieces, can be put together to construct anything, be it a house, a car, or any other object, DOS core primitives can be put together to construct or deconstruct any data application. This helps data developers serve and improve actual business value instead of wasting their time and effort on managing the complex processes behind those outcomes.

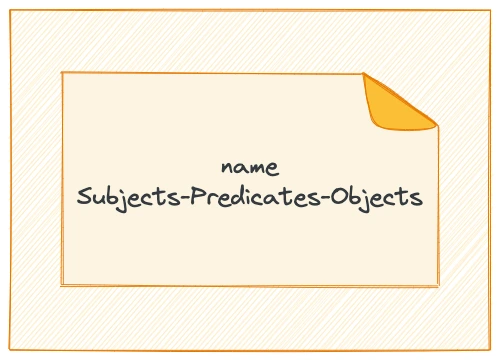

Primitives are atomic and logical units with their own life cycle, which can be composed together and also with other components and stacks to act as the building blocks of the Data Operating System. They can be treated as artefacts that can be source-controlled and managed using a version control system. Every Primitive can be thought of as an abstraction that allows you to enumerate specific goals and outcomes in a declarative manner instead of going through the arduous process of defining ‘how to reach those outcomes’.

Workflow

Workflow is a manifestation of a DAG (directed acyclic graph) that streamlines and automates big data tasks. DOS uses it to manage both batch and streaming data ingestion and processing.
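To make the DAG idea concrete, the sketch below declares a hypothetical workflow as "job → jobs it depends on" and lets Python's standard `graphlib.TopologicalSorter` (Python 3.9+) derive a valid execution order. The job names and the `run` function are placeholders; this illustrates DAG ordering in general, not the DOS Workflow engine.

```python
from graphlib import TopologicalSorter

# Hypothetical workflow: each job maps to the set of jobs it depends on.
workflow = {
    "ingest_orders": set(),
    "ingest_customers": set(),
    "clean_orders": {"ingest_orders"},
    "join": {"clean_orders", "ingest_customers"},
    "publish": {"join"},
}


def run(job: str) -> None:
    # Placeholder for the real task (a Spark job, a SQL step, and so on).
    print(f"running {job}")


# static_order() yields every job after all of its dependencies
# and raises graphlib.CycleError if the graph is not acyclic.
order = list(TopologicalSorter(workflow).static_order())
for job in order:
    run(job)
```

`TopologicalSorter` also offers `prepare()`, `get_ready()`, and `done()`, which a scheduler could use to run independent jobs such as the two ingestion steps in parallel.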

Service

Service is a long-running process that receives and/or serves APIs for real-time data, such as event processing and stock trades. It gathers, processes, and scrutinizes streaming data for quick reactions.
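The kind of logic a Service runs can be sketched as a function that consumes a stream of trades and reacts as each one arrives. Here the stream is a finite list for demonstration; in a real long-running service it would be an unbounded source such as a message-queue consumer. `watch_trades`, the window size, and the 5% threshold are all hypothetical choices.

```python
from collections import deque
from collections.abc import Iterable, Iterator


def watch_trades(trades: Iterable[tuple[str, float]], window: int = 3,
                 threshold: float = 0.05) -> Iterator[str]:
    """Yield an alert whenever a price moves more than `threshold` (as a
    fraction) away from the average of that symbol's previous `window` prices."""
    recent: dict[str, deque] = {}
    for symbol, price in trades:
        history = recent.setdefault(symbol, deque(maxlen=window))
        if len(history) == window:
            avg = sum(history) / window
            if abs(price - avg) / avg > threshold:
                yield f"{symbol}: {price:.2f} vs avg {avg:.2f}"
        history.append(price)  # the deque drops the oldest price automatically


stream = [("ACME", 100.0), ("ACME", 101.0), ("ACME", 99.0),
          ("ACME", 100.5), ("ACME", 110.0)]
alerts = list(watch_trades(stream))
print(alerts)
```

Because the function is a generator, it keeps only a small per-symbol window in memory and emits alerts incrementally, which suits a process that never terminates.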

Policy

Policy regulates behaviour in a Data Operating System. Access policies control what individuals can do, while data policies control the rules for data integrity, security, quality, and use throughout its lifecycle and state changes.
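The two kinds of policy can be sketched side by side: an access policy that decides whether a user may act on a resource, and a data policy that transforms values as they are read. Everything here is hypothetical (`AccessPolicy`, `is_allowed`, `mask_email`, the tag and prefix conventions), and the deny-by-default rule is an assumed design choice, not a documented DOS behaviour.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AccessPolicy:
    """Allow users carrying a tag to perform listed actions under a resource prefix."""
    subject_tag: str
    actions: frozenset[str]
    resource_prefix: str


def is_allowed(user_tags: set[str], action: str, resource: str,
               policies: list[AccessPolicy]) -> bool:
    # Deny by default: access is granted only if at least one policy matches.
    return any(
        p.subject_tag in user_tags
        and action in p.actions
        and resource.startswith(p.resource_prefix)
        for p in policies
    )


def mask_email(value: str) -> str:
    """A data policy applied to values at read time: keep only the first letter."""
    local, _, domain = value.partition("@")
    return f"{local[:1]}***@{domain}"


policies = [AccessPolicy("analyst", frozenset({"read"}), "sales/")]
print(is_allowed({"analyst"}, "read", "sales/orders", policies))
print(is_allowed({"analyst"}, "write", "sales/orders", policies))
print(mask_email("jane.doe@example.com"))
```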

Depot

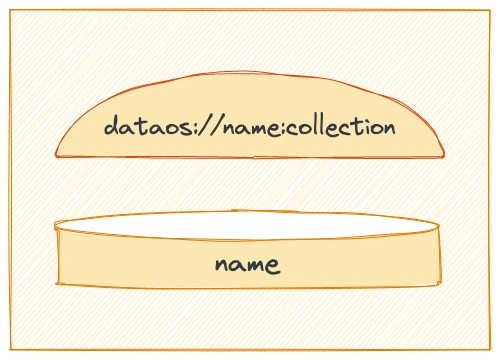

Depot offers a uniform way to connect to various data sources, simplifying the process by abstracting the various protocols and complexities of the source systems into a common taxonomy and route.
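The "common taxonomy and route" idea can be illustrated with a single address format that hides which protocol sits behind each source. The `depot://<depot>/<collection>/<dataset>` scheme, the `DEPOTS` registry, and the `Connector` class below are assumptions made up for this sketch; they are not the DOS addressing format.

```python
from dataclasses import dataclass
from urllib.parse import urlparse


@dataclass
class Connector:
    """Stand-in for a protocol-specific client (JDBC, S3, Kafka, ...)."""
    kind: str
    target: str

    def describe(self, collection: str, dataset: str) -> str:
        return f"{self.kind} -> {self.target}/{collection}/{dataset}"


# Hypothetical registry: depot name -> protocol-specific connection details.
DEPOTS = {
    "crm": Connector("postgres", "db.internal:5432/crm"),
    "lake": Connector("s3", "s3://acme-lake"),
}


def resolve(address: str) -> str:
    """Resolve an address of the assumed form depot://<depot>/<collection>/<dataset>."""
    parsed = urlparse(address)
    if parsed.scheme != "depot":
        raise ValueError(f"unsupported scheme: {parsed.scheme!r}")
    collection, dataset = parsed.path.strip("/").split("/")
    return DEPOTS[parsed.netloc].describe(collection, dataset)


print(resolve("depot://crm/public/customers"))
print(resolve("depot://lake/raw/events"))
```

Consumers only ever see the uniform address; swapping a source from Postgres to S3 would change the registry entry, not the code that reads the data.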

Model

Model is a semantic data model that enables specific use cases by providing rich business context and a common glossary to the end user. It acts as an ontological and taxonomical layer above contracts and physical data.
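A semantic model lets users ask for business terms such as "revenue by region" while the model knows which physical columns and expressions those terms mean. The mapping and the `to_sql` helper below are a hypothetical sketch of that translation, with invented table and column names; it is not the DOS Model specification.

```python
# Hypothetical semantic model: business names mapped to physical SQL expressions.
MODEL = {
    "table": "sales.orders_v2",
    "dimensions": {
        "region": "cust_rgn_cd",
        "order_month": "date_trunc('month', ord_ts)",
    },
    "measures": {
        "revenue": "sum(net_amt)",
        "orders": "count(distinct ord_id)",
    },
}


def to_sql(measures: list[str], dimensions: list[str], model: dict = MODEL) -> str:
    """Translate a business-level question into SQL against the physical table."""
    dims = [f"{model['dimensions'][d]} AS {d}" for d in dimensions]
    meas = [f"{model['measures'][m]} AS {m}" for m in measures]
    sql = f"SELECT {', '.join(dims + meas)} FROM {model['table']}"
    if dimensions:
        # Group by the dimension columns, which come first in the SELECT list.
        positions = ", ".join(str(i + 1) for i in range(len(dimensions)))
        sql += f" GROUP BY {positions}"
    return sql


print(to_sql(["revenue"], ["region"]))
```

The user never needs to know that "region" lives in a column called `cust_rgn_cd`; that knowledge is captured once in the model.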

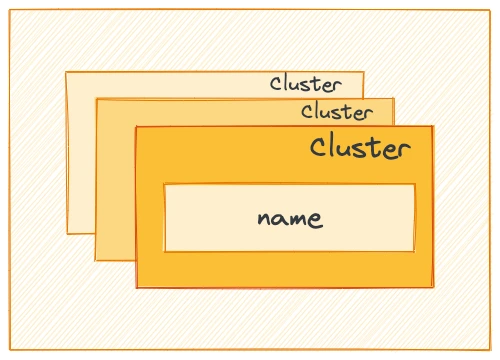

Cluster

Cluster is a collection of computation resources and configurations on which you run data engineering, data science, and analytics workloads. A Cluster in DOS is provisioned for exploratory, querying, and ad-hoc analytics workloads.

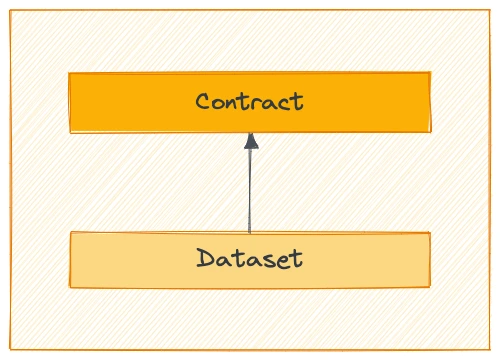

Contract

Contract is an agreement between data producers and consumers to ensure data expectations are met. Expectations may include shape, semantics, data quality, or security. Contract can be enabled by any primitive in DOS.
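A contract covering shape and basic quality can be sketched as a declared set of typed columns plus non-null rules, checked against incoming rows. `Contract` and `violations` are hypothetical names, and real contracts would also cover semantics and security, which this example leaves out.

```python
from dataclasses import dataclass


@dataclass
class Contract:
    """Hypothetical contract: required columns with types, plus non-null columns."""
    columns: dict[str, type]
    not_null: set[str]


def violations(rows: list[dict], contract: Contract) -> list[str]:
    """List every way the rows break the contract (empty list means compliant)."""
    problems = []
    for i, row in enumerate(rows):
        for col, expected in contract.columns.items():
            if col not in row:
                problems.append(f"row {i}: missing column {col!r}")
            elif row[col] is None:
                if col in contract.not_null:
                    problems.append(f"row {i}: {col!r} is null")
            elif not isinstance(row[col], expected):
                problems.append(f"row {i}: {col!r} expected {expected.__name__}")
    return problems


contract = Contract(columns={"order_id": int, "amount": float}, not_null={"order_id"})
rows = [{"order_id": 1, "amount": 9.5}, {"order_id": None, "amount": "ten"}]
print(violations(rows, contract))
```

Returning a list of violations, rather than failing on the first one, gives producers a complete picture of what to fix before consumers are affected.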

Secret

Secret stores sensitive information like passwords, tokens, or keys. Users reference the Secret instead of the sensitive information itself, which lets you monitor and control access while reducing the risk of data leaks.
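The pattern of "reference the Secret, not the value" can be sketched as a config that contains only placeholders, resolved at runtime against a store that logs each access. The `${secret:<name>}` placeholder syntax, `SecretStore`, and `resolve_secrets` are hypothetical; a real deployment would back the store with a proper secret manager rather than a dict.

```python
import re

# Workload configs hold only references such as "${secret:warehouse_password}".
SECRET_REF = re.compile(r"\$\{secret:([A-Za-z0-9_]+)\}")


class SecretStore:
    """Stand-in for a real secret manager; here secrets live in a dict."""

    def __init__(self, values: dict[str, str]) -> None:
        self._values = values
        self.access_log: list[str] = []  # every lookup is recorded for auditing

    def get(self, name: str) -> str:
        self.access_log.append(name)
        return self._values[name]


def resolve_secrets(config: dict[str, str], store: SecretStore) -> dict[str, str]:
    """Return a runtime copy of `config` with secret references substituted."""
    return {
        key: SECRET_REF.sub(lambda m: store.get(m.group(1)), value)
        for key, value in config.items()
    }


store = SecretStore({"warehouse_password": "s3cr3t"})
config = {"user": "etl", "password": "${secret:warehouse_password}"}
runtime = resolve_secrets(config, store)
```

The declared `config` can be committed to version control safely, since it never contains the credential itself, and rotating the password only touches the store.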

Database

The Database primitive is for use cases where output must be saved in a specific format. It can be used in any scenario where you need to syndicate structured data. Once you create a Database, you can put a Depot and a Service on top of it to serve data instantly.

Compute

Compute resources can be thought of as the processing power required by any workflow, service, or query workload to carry out tasks. Compute relates to common server components such as CPUs and RAM, so a physical server within a cluster would be considered a compute resource, as it may have multiple CPUs and gigabytes of RAM.

Central Control, Multi-Cloud Data Plane

The kernel layers and the primitive quantum of DOS allow users to operationalise DOS into a dual-plane conceptual architecture where the control is forked between one central plane for core global components and one or more data planes for localised operations.

Control & Data plane separation decouples the governance and execution of data applications. This gives the flexibility to run the operating system for data in a hybrid environment and deploy a multi-tenant data architecture. Organizations can manage cloud storage and compute instances with a centralised control plane.

Control Plane

The Control Plane helps admins govern the data ecosystem through centralised management and control of vertical components.

- Policy-based and purpose-driven access control of various touchpoints in cloud-native environments, with precedence to local ownership.

- Orchestrate data workloads, compute cluster life-cycle management, and version control of a Data Operating System’s resources.

- Manage metadata of different types of data assets.

Data Plane

The Data Plane helps data developers to deploy, manage and scale data products.

- The distributed SQL query engine works with data federated across different source systems.

- Declarative stack to ingest, process, and extensively syndicate data.

- Complex event processing engine for stateful computations over data streams.

- Declarative DevOps SDK to publish data apps in production.
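The split between a central control plane and one or more data planes can be sketched as follows: the control plane holds the global rules and decides where a workload may run, while data planes only execute. `ControlPlane`, `DataPlane`, the plane names, and the placement rule are hypothetical, chosen to illustrate the separation rather than to mirror the DOS implementation.

```python
from dataclasses import dataclass, field


@dataclass
class DataPlane:
    """Executes workloads locally, e.g. in one cloud region or on-premises."""
    name: str
    executed: list[str] = field(default_factory=list)

    def run(self, workload: str) -> None:
        self.executed.append(workload)


@dataclass
class ControlPlane:
    """Holds global rules and dispatches workloads; it runs nothing itself."""
    planes: dict[str, DataPlane] = field(default_factory=dict)
    allowed: dict[str, set[str]] = field(default_factory=dict)  # workload -> plane names

    def register(self, plane: DataPlane) -> None:
        self.planes[plane.name] = plane

    def dispatch(self, workload: str, plane_name: str) -> None:
        if plane_name not in self.allowed.get(workload, set()):
            raise PermissionError(f"{workload} may not run on {plane_name}")
        self.planes[plane_name].run(workload)


control = ControlPlane(allowed={"eu-sales-etl": {"aws-eu"}})
eu, us = DataPlane("aws-eu"), DataPlane("gcp-us")
control.register(eu)
control.register(us)
control.dispatch("eu-sales-etl", "aws-eu")  # permitted: runs in the EU plane
```

Keeping governance in one place while execution stays local is what allows the same rules to apply across hybrid and multi-cloud deployments.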

DOS as an enabler for Data Design Architectures

DOS, therefore, powers very specific data design architectures and data applications with just a few degrees of rearrangement. This also makes way for curating self-service layers that abstract users from the complexities of siloed and isolated subsystems so they can focus on the problem at hand – building data products and applications.