Implementing Design Architectures with DOS: Data Mesh

What is Data Mesh?

Data Mesh is less a technology than an organizational and mindset transformation. Simply put, it is a collaboration model for improving data operationalization across the teams and domain structures that organizations actually have.

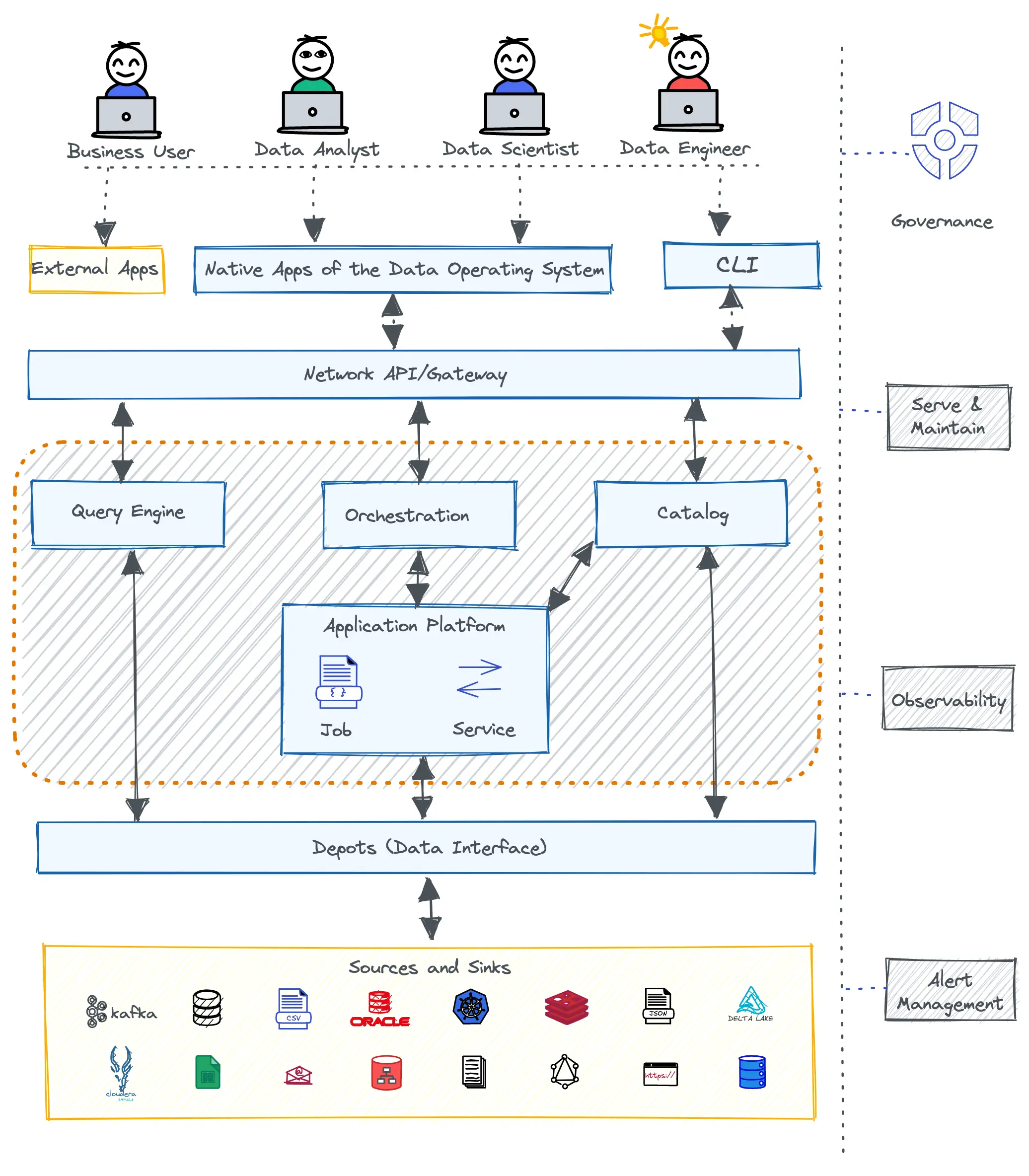

🤖 Self-Serve Data Infrastructure Platform:

Data Mesh advocates a data developer platform that abstracts complex and siloed systems and exposes curated self-service layers for a broad band of data users. The platform enables dedicated teams to handle the cognitive burden of complex subsystems, redundant tools, and countless services.

💠 Domain Ownership:

Data Mesh encourages distributed ownership of, and responsibility for, data. Data should be produced, owned, and managed by domain teams instead of dumping the entire responsibility on central engineering teams that are already flooded with requests.

🔐 Federated Governance:

Governance, when it comes to data, is extremely challenging due to rapidly changing standards, undefined ownership, and the dynamic state of data. Federated instead of centralized governance distributes the responsibility and identifies data owners for accountability, keeping pace with dynamic requirements.

📦 Data as a Product:

Data Mesh’s ultimate goal is to create or enable data products through a decentralized data ecosystem design. Productizing data means adopting an outcome-first mindset in which data adds business value for the end user instead of lying dormant and consuming more business resources than it delivers.

The Product Mindset

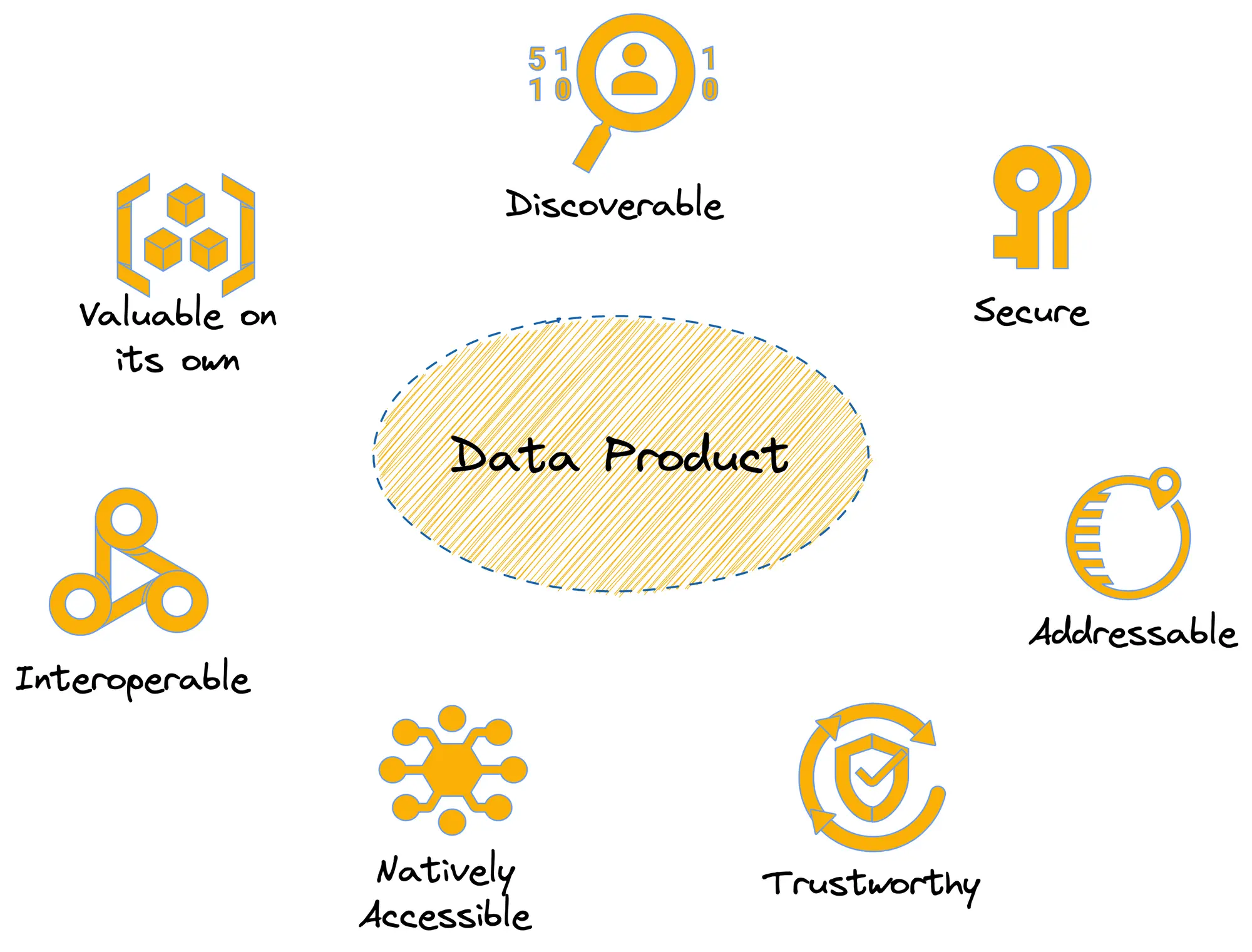

To successfully implement the data mesh design, it is important to understand the concept of Data Products. Simply put, a Data Product is a unit of data that adds value to the end user consistently and reliably. A data product has seven key attributes, each of which a data operating system (DOS) enables as follows:

| Data Product Attribute | Attribute Description | DOS Implementation |
| --- | --- | --- |
| Addressable | Is available as a standard asset across cross-functional, regional, and multi-cloud environments. | Addressable data is achieved through metadata democratization instead of exposing data itself across risky endpoints. DOS enables complete metadata visibility and manages it through a central control plane while enabling governed access to data. |
| Discoverable | Is seamlessly searchable across heterogeneous source systems, resources, pipelines, and activation endpoints. | Data discoverability is one of the pillars of data operationalization and is a challenging problem for current data stacks due to isolated catalogs (point solutions). A DOS implementation has visibility over the entire data landscape, enabling true data discoverability along with optional smart capabilities. |
| Interoperable | Data assets, processes, and components easily talk to each other through common interfaces or languages. | DOS is built on loosely coupled and tightly integrated primitives that constantly talk to each other within the unified architecture. It is also possible to extend interoperability to non-native components outside the operating system through common APIs. |
| Natively Accessible | Language, format, personnel, and system-agnostic accessibility. | DOS exposes a common domain-specific language (DSL) to navigate the low-level subsystems. It also understands a whole range of programming and SQL languages and can adopt new ones through native transpilers. |
| Secure | Governance through access and masking policies, attribute-based access control, encryption capabilities, and row and column-level security controls. | End-to-end governance is enabled through the DOS central control plane, which acts as a policy decision point and orchestrates access and data policies across policy enforcement points in the DOS user plane. |
| Trustworthy | Meets quality expectations, is compliant with required standards, and carries end-to-end lineage and provenance details. | The primary outcome of DOS is data that inherently meets quality, governance, and semantic expectations set as per business requirements. This is achieved through right-to-left data modeling and contractual handshakes at multiple points in the DOS landscape. |
| Valuable on its own | A complete and independent entity that could directly impact business decisions. | DOS surfaces reliable and independent data entities that act as data objects and can be operationalized for multiple use cases, including model consumption, analytics requirements, domain-specific operations, or cross-functional use cases. |

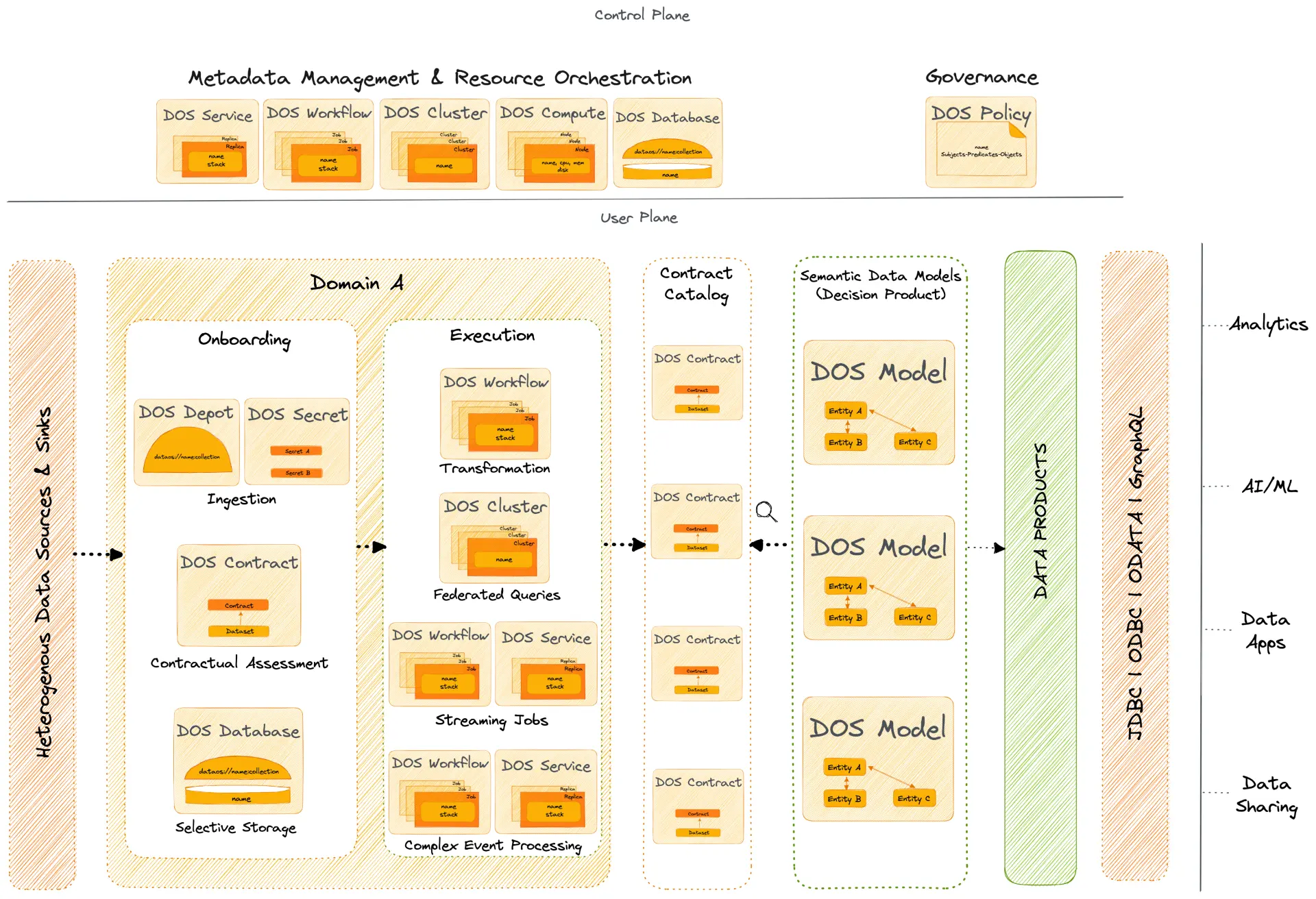

The Data Mesh Design Architecture as operationalized through a Data Operating System

Unified Architecture with decoupled control and user planes, domain segregation, and decoupled logical and physical layers.

Onboarding

Ingestion

DOS takes care of ingestion through the depot construct and governs entry points through the secrets. All one needs to do is enter the credentials of any source system one time and DOS will take care of governing that entrance indefinitely based on access and masking policies managed by the central control plane.
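To make the register-once idea concrete, here is a minimal sketch in Python. It assumes a depot is a named entry point whose credentials live in a separate secret store, so users only ever hold a reference. `SecretStore`, `Depot`, `DepotRegistry`, and the address format are hypothetical names invented for this illustration; they are not DOS APIs.

```python
from dataclasses import dataclass, field


@dataclass
class SecretStore:
    """Hypothetical vault: credentials are written once and handed out by reference."""
    _secrets: dict = field(default_factory=dict)

    def put(self, name: str, credentials: dict) -> str:
        self._secrets[name] = dict(credentials)
        return name  # callers keep the reference, never the credentials

    def get(self, name: str) -> dict:
        return self._secrets[name]


@dataclass(frozen=True)
class Depot:
    """Hypothetical depot: an addressable entry point to one source system."""
    name: str
    source_type: str
    secret_ref: str


class DepotRegistry:
    def __init__(self, secrets: SecretStore):
        self._secrets = secrets
        self._depots = {}

    def register(self, name: str, source_type: str, credentials: dict) -> Depot:
        # Credentials are entered exactly once, at registration time.
        ref = self._secrets.put(f"{name}-secret", credentials)
        depot = Depot(name, source_type, ref)
        self._depots[name] = depot
        return depot

    def address(self, depot_name: str, collection: str, dataset: str) -> str:
        # Illustrative address format; raises KeyError for unknown depots.
        depot = self._depots[depot_name]
        return f"{depot.source_type}://{depot.name}/{collection}/{dataset}"
```

After registration, downstream users refer to data by depot address only; access and masking decisions would be made by the control plane, which this sketch does not model.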

Storage

DOS supports intelligent data movement, which cuts down on unnecessary, expensive, and time-consuming migration of entire datasets. With contractual filters, only data that adheres to business expectations is channeled and transformed accordingly.
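A contractual filter can be pictured as a set of named expectations that each record must satisfy before it is moved. The sketch below is only an illustration of that idea: the `channel` function and the `orders_contract` expectations are hypothetical and do not reflect how DOS expresses contracts.

```python
from typing import Callable, Dict, Iterable, List, Tuple

# Assumption: a contract is a mapping of expectation name -> predicate on a record.
Contract = Dict[str, Callable[[dict], bool]]


def channel(records: Iterable[dict], contract: Contract) -> Tuple[List[dict], List[tuple]]:
    """Split records into those meeting every expectation and those that fail any."""
    accepted, rejected = [], []
    for record in records:
        failed = [name for name, check in contract.items() if not check(record)]
        if failed:
            rejected.append((record, failed))  # keep the reasons for later review
        else:
            accepted.append(record)
    return accepted, rejected


# Hypothetical expectations for an "orders" dataset.
orders_contract: Contract = {
    "order_id_present": lambda r: r.get("order_id") is not None,
    "amount_non_negative": lambda r: isinstance(r.get("amount"), (int, float))
    and r["amount"] >= 0,
}
```

Only the accepted records would be moved and transformed; the rejected ones stay at the source along with the names of the expectations they failed.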

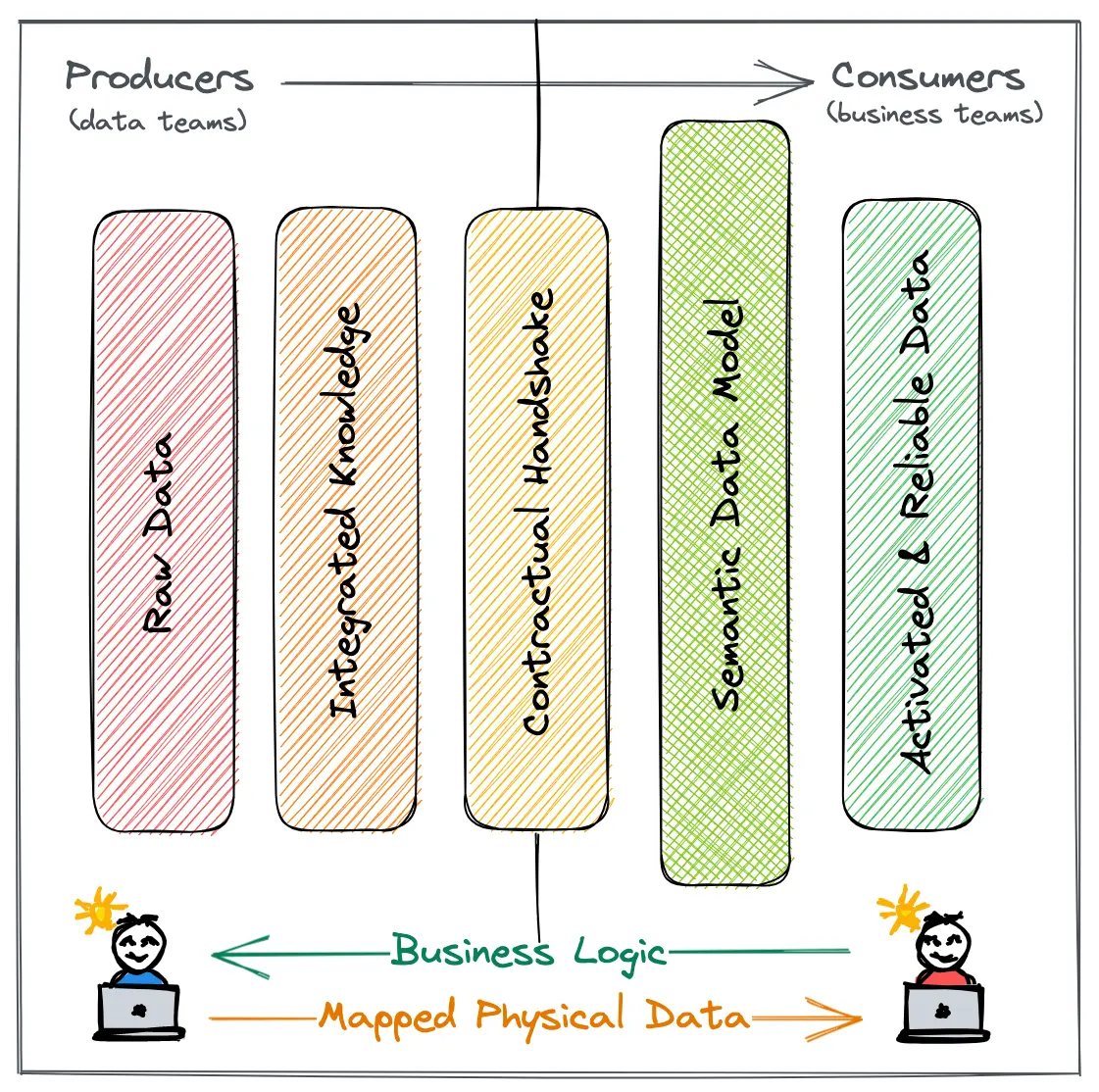

Conceptual Illustration of Right-to-Left Data Modeling

Even if we assume the contract primitive is absent, right-to-left data modeling supported by the DOS model primitive enables businesses to define requirements even before the data is available in physical systems. This gives control of data back to the business where business teams can assert key data requirements instead of business use cases getting molded by whatever data is at hand.

Once the requirements are in place, Data Engineers only need to map the data sources to channel the specific slices of data that add value to domain and business teams. Adding contracts to the mix enables the channeled data to be declaratively managed for semantics, quality, and governance.
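The right-to-left flow described above can be sketched as two steps: the business declares the fields an entity needs, and engineers later map source columns onto those fields. The names below (`EntityRequirement`, `unmapped_fields`, `project`) are hypothetical and chosen for this example only.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class EntityRequirement:
    """Declared by the business before any physical data is available."""
    entity: str
    fields: tuple  # names of the fields the business needs


def unmapped_fields(requirement: EntityRequirement, mapping: dict) -> list:
    """Return required fields that no source column has been mapped to yet."""
    return [f for f in requirement.fields if f not in mapping]


def project(source_row: dict, mapping: dict) -> dict:
    """Keep only the slice of a source row that the requirement asks for.

    `mapping` goes from required field name to source column name.
    """
    return {target: source_row[source] for target, source in mapping.items()}
```

The requirement exists first and drives the mapping, so any gap shows up as an unmapped field rather than as a use case quietly reshaped around whatever columns happen to exist.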

Execution

Transformation

Writing transformation jobs can be complex and requires special expertise. DOS abstracts this complexity with intuitive wrappers through which users only need to declare the input, output, and output configs. The actual transformation jobs are self-built and executed at a low level in sync with contractual expectations defined for ingested data on the production side.
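As a rough sketch of what "declare the input, output, and output configs" could look like, the example below separates a declarative job description from a small generic runner. The `JOB` layout and the `run_job` function are assumptions made for illustration; the actual DOS wrappers are not shown in this article.

```python
import csv
import io

# Hypothetical declarative job: the user states what goes in and what comes out.
JOB = {
    "input": {"format": "csv"},
    "output": {
        "columns": ["city", "revenue"],  # projection
        "sort_by": "revenue",            # output config
        "descending": True,
    },
}


def run_job(job: dict, raw_text: str) -> list:
    """Generic runner that derives the transformation from the declaration."""
    if job["input"]["format"] != "csv":
        raise ValueError("this sketch only understands csv input")
    rows = list(csv.DictReader(io.StringIO(raw_text)))

    out = job["output"]
    projected = [{col: row[col] for col in out["columns"]} for row in rows]

    sort_key = out.get("sort_by")
    if sort_key:
        # Values arrive as strings from the CSV reader; compare them numerically.
        projected.sort(key=lambda r: float(r[sort_key]),
                       reverse=out.get("descending", False))
    return projected
```

The user edits only `JOB`; the runner that turns the declaration into work is owned by the platform.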

Federated queries

DOS supports common SQL interfaces that can reach across heterogeneous source systems and query data as if it came from a single data source. No data movement is involved in the transaction, and governance policies are applied on the fly as data is queried across multiple sources. Each model query is optimised for the grammar of the source system it queries to achieve high query performance and minimize query expense.
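The following sketch imitates the idea on a very small scale using Python's standard `sqlite3` module. Two separate in-memory databases stand in for two source systems, one SQL statement joins across them, and a masking function is applied while the query runs. This is an analogy only: real federation spans different engines, and the `mask_email` policy and table names are invented for the example.

```python
import sqlite3


def mask_email(value: str) -> str:
    """Hypothetical masking policy: keep the first character and the domain."""
    local, _, domain = value.partition("@")
    return local[:1] + "***@" + domain


def build_demo_connection() -> sqlite3.Connection:
    conn = sqlite3.connect(":memory:")
    # A second, independent in-memory database plays the role of another source.
    conn.execute("ATTACH DATABASE ':memory:' AS sales")
    conn.execute("CREATE TABLE main.customers (id INTEGER, email TEXT)")
    conn.execute("CREATE TABLE sales.orders (customer_id INTEGER, amount REAL)")
    conn.executemany("INSERT INTO main.customers VALUES (?, ?)",
                     [(1, "ana@example.com"), (2, "bo@example.com")])
    conn.executemany("INSERT INTO sales.orders VALUES (?, ?)",
                     [(1, 40.0), (1, 60.0), (2, 15.0)])
    # Register the policy so it is enforced inside the query itself.
    conn.create_function("mask_email", 1, mask_email)
    return conn


FEDERATED_QUERY = """
SELECT mask_email(c.email) AS email, SUM(o.amount) AS total
FROM main.customers AS c
JOIN sales.orders AS o ON o.customer_id = c.id
GROUP BY c.id, c.email
ORDER BY total DESC
"""
```

Running `build_demo_connection().execute(FEDERATED_QUERY).fetchall()` returns masked emails with per-customer totals, without copying rows from one database into the other.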

Streaming data and Complex Event Processing

DOS supports out-of-the-box stacks for processing stream events declaratively. These stacks enable stateless extraction and transformation of streaming data on the fly with no dependencies on runtime or payload. Streaming services are also centrally orchestrated and governed.
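A stateless stream transformation handles each event on its own, keeping nothing between events. The generator below is a minimal sketch of that property in plain Python; the event shape (`type`, `user`, `amount`) is an assumption for the example and says nothing about the formats the DOS stacks accept.

```python
import json
from typing import Iterable, Iterator


def transform_events(lines: Iterable[str]) -> Iterator[dict]:
    """Extract and reshape purchase events one at a time, holding no state."""
    for line in lines:
        try:
            event = json.loads(line)
        except json.JSONDecodeError:
            continue  # skip malformed payloads instead of stopping the stream
        if not isinstance(event, dict) or event.get("type") != "purchase":
            continue
        yield {
            "user": event["user"],
            "amount_cents": round(event["amount"] * 100),
        }
```

Because each output depends only on its own input event, the same function can run on any number of parallel workers without coordination.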

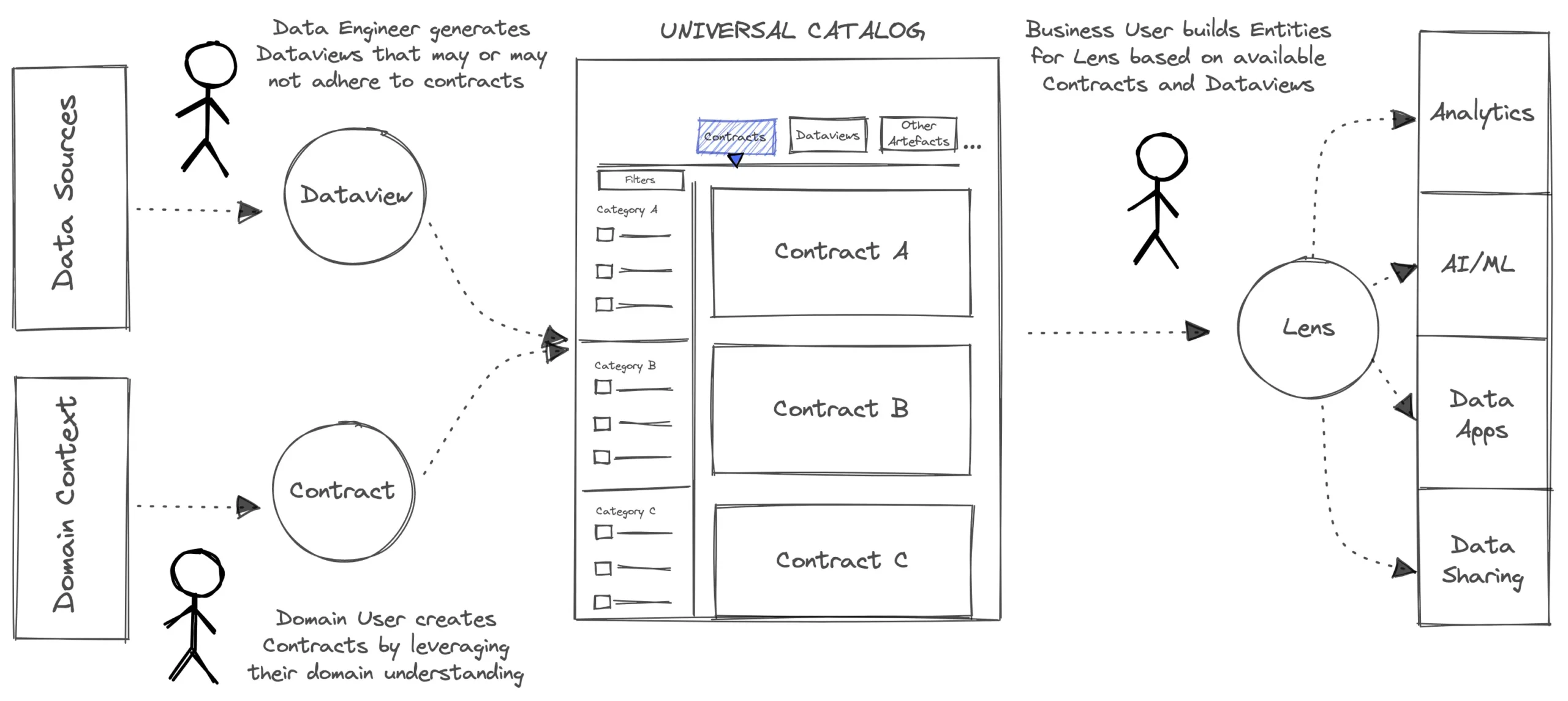

Contract Catalogs

DOS enables a smart contract catalog embedded with NLP capabilities so that contracts do not end up as yet another unmanageable data asset. The contract catalog is an ideal enabler of the data mesh ideology since it pragmatically distributes ownership across the organization’s structure.

A data operating system, by nature, has visibility across the entire data ecosystem and is, thus, able to create a thorough contract catalog that interweaves with the day-to-day activities of domain teams, business teams, and developer teams.

Contracts are ideally defined, owned, and channeled into catalogs by domain teams who have a thorough understanding of the business landscape. The data engineering teams create dataviews or temporary data tables that serve specific business requests.

Ideally, dataview generation should adhere to contract expectations, but data engineers should not feel restricted by such an imposition. Instead, they can choose to adhere to existing contracts from the catalog to ensure the number of requests from producers and consumers is significantly cut down and the dataviews become backward compatible.

Finally, the business teams working on specific use cases source dataviews that adhere to contracts matching the business’ expectations. These dataviews power data models that activate data which is now high in quality, compliant with governance standards, and consistent with universal semantics.
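The division of labour across the three teams can be sketched as a small in-memory catalog: domain teams publish contracts, data engineers register dataviews against them, and business teams look up dataviews by contract. All class and method names here are hypothetical, and the plain keyword search is a stand-in for the NLP-backed search mentioned above.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Contract:
    name: str
    owner_domain: str  # the domain team accountable for this contract
    description: str


@dataclass
class ContractCatalog:
    contracts: dict = field(default_factory=dict)
    dataviews: dict = field(default_factory=dict)  # dataview name -> contract names

    def publish(self, contract: Contract) -> None:
        """Domain teams define and publish contracts."""
        self.contracts[contract.name] = contract

    def register_dataview(self, dataview: str, adheres_to: list) -> None:
        """Data engineers declare which existing contracts a dataview adheres to."""
        unknown = set(adheres_to) - set(self.contracts)
        if unknown:
            raise KeyError(f"unknown contracts: {sorted(unknown)}")
        self.dataviews[dataview] = set(adheres_to)

    def search(self, keyword: str) -> list:
        """Naive keyword match over descriptions (placeholder for NLP search)."""
        kw = keyword.lower()
        return sorted(c.name for c in self.contracts.values()
                      if kw in c.description.lower())

    def dataviews_for(self, contract_name: str) -> list:
        """Business teams source dataviews that adhere to a given contract."""
        return sorted(view for view, names in self.dataviews.items()
                      if contract_name in names)
```

Adherence is optional in this sketch, matching the text: a dataview may be registered with an empty list, but only dataviews that name a contract are discoverable through it.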

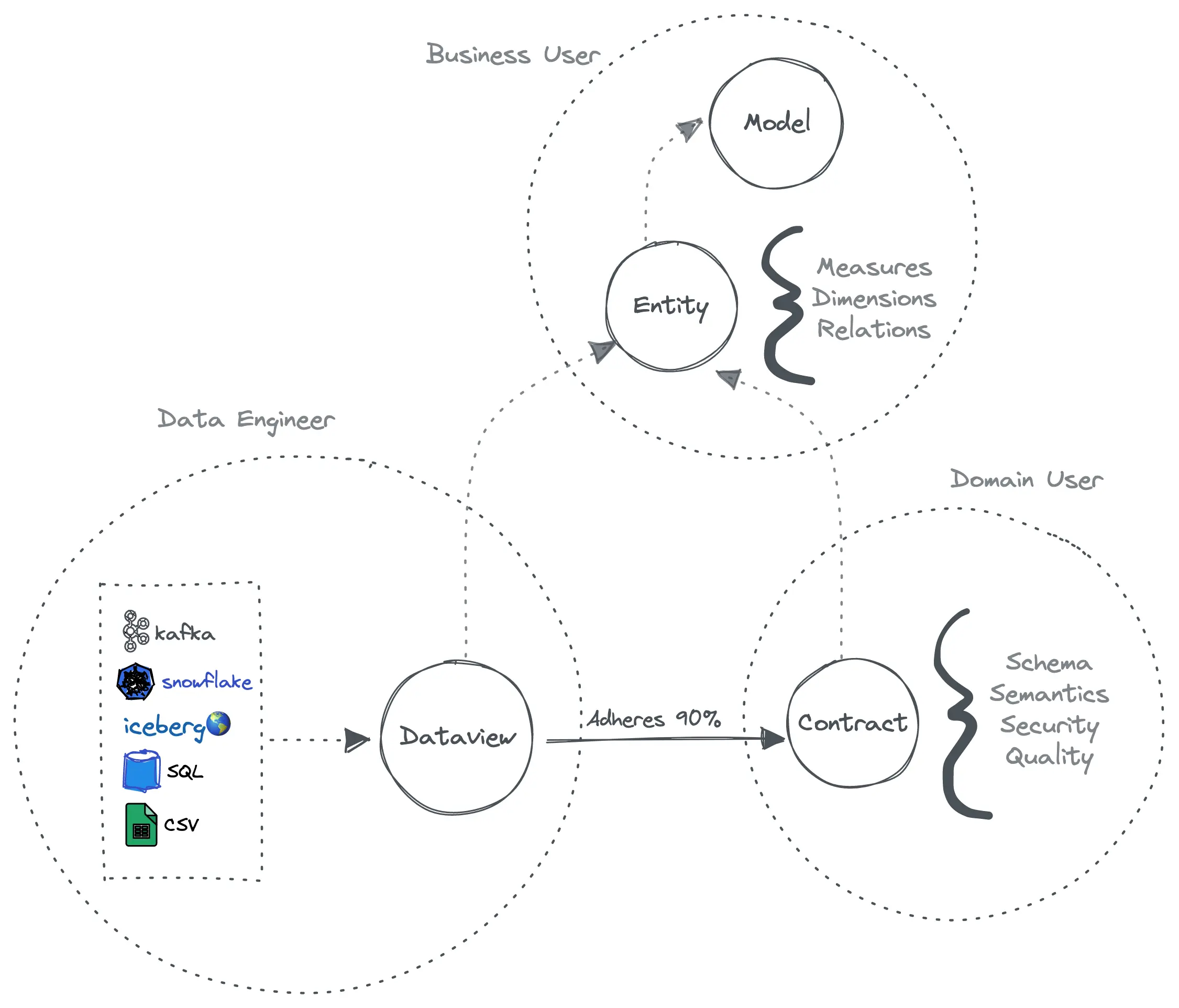

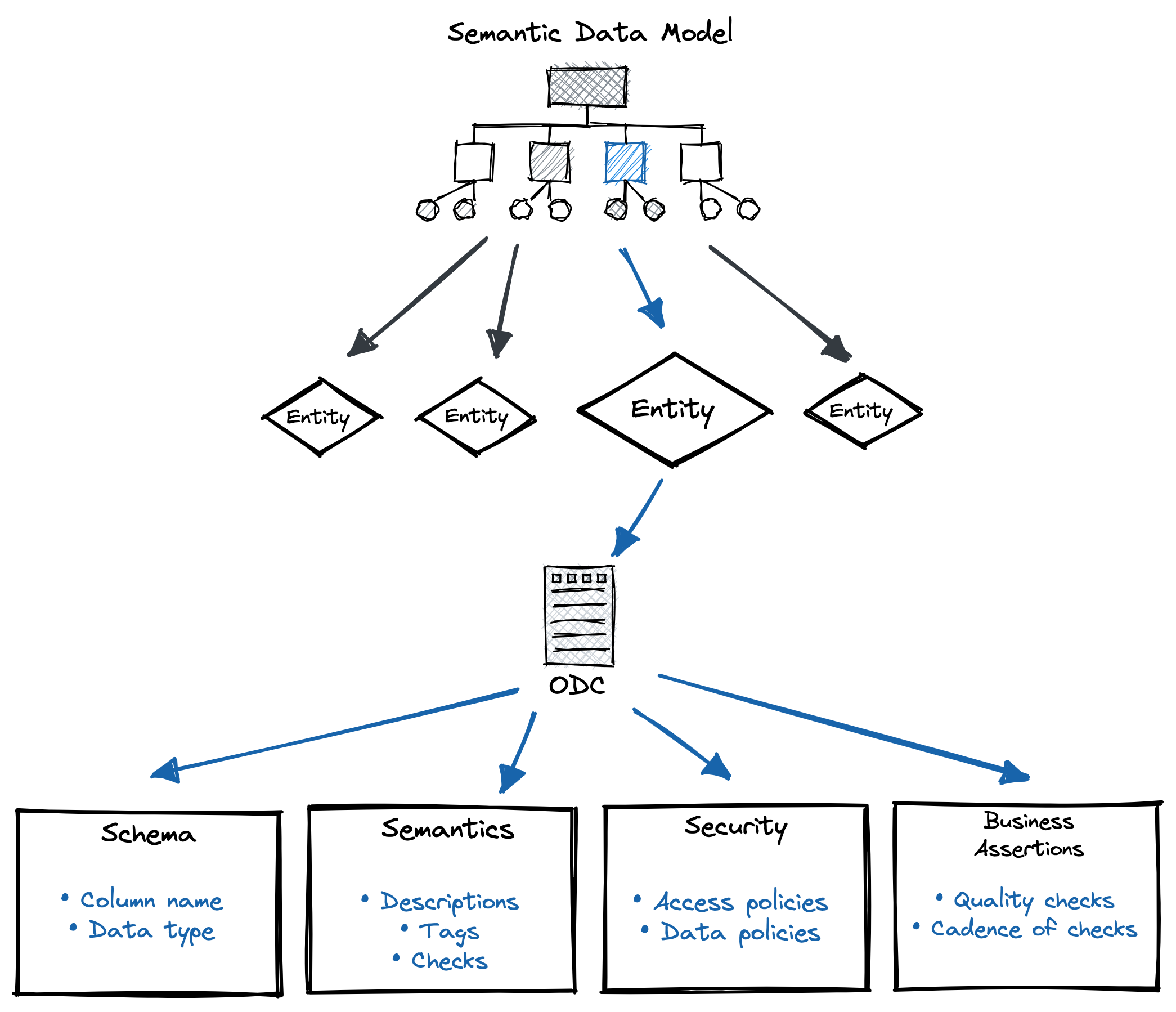

Semantic Data Models

A semantic data model can be considered a decision product (a unit used to power decisions with data). Users have complete flexibility to use any type of decision product in the logical layer or on top of it. Semantic models are typically owned by business users who work directly with business use cases. They leverage cross-functional data generated and curated by domain and developer teams.

Models refer to contracts to channel entities that serve data according to the pre-defined business expectations (shape, semantics, security, and use case assertions). Entities are business objects such as Customers, Orders, and Transactions.

Once a pool of high-quality entities is established, it can be used to power data models that define dimensions, relationships, and a metrics layer. Defining the metrics layer at a level lower than that of dashboarding tools (as is regular practice) is key to democratizing data models and cutting off lock-ins and model silos.
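A metric defined below the dashboard layer is, in essence, a named aggregation over entities joined through a declared relationship. The sketch below illustrates this with two entities and one relationship; the function name, field names, and sample shapes are assumptions for the example rather than the DOS modeling syntax.

```python
from collections import defaultdict


def metric_by_dimension(orders: list, customers: list,
                        dimension: str, measure: str) -> dict:
    """Sum `measure` from orders, grouped by a customer `dimension`.

    Relationship assumed for this sketch: orders.customer_id -> customers.id
    """
    dimension_by_customer = {c["id"]: c[dimension] for c in customers}
    totals = defaultdict(float)
    for order in orders:
        totals[dimension_by_customer[order["customer_id"]]] += order[measure]
    return dict(totals)
```

Since the metric lives in one shared definition, any dashboard, notebook, or application that calls it gets the same number, which is the lock-in and model-silo problem the paragraph above refers to.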

Models at this layer could now be directly operationalized to work with applications on the activation layer, such as AI/ML, analytics, and data-sharing use cases. These models can also easily be imported to higher-level analysis or exploratory tools or connected to DOS’ native dashboards to analyze and build business reports on the fly.

In conclusion, a data operating system supports all the pillars of a data mesh and more. Distilling the above information, below is a summary of how DOS enables the four key pillars of a data mesh design architecture.

🤖 Self-Serve Data Infrastructure Platform:

It is characteristic of any Operating System architecture to act as an abstraction layer that hides complex subsystems while enabling a wide range of users to self-serve required capabilities. For instance, DOS enables complete flexibility for data developers while ensuring they have minimal need to exercise that flexibility. From onboarding data to executing operations on top of it, DOS manages the low-level operations declaratively while still allowing the data developer to get into the nitty-gritty whenever necessary.

Similarly, DOS caters to the requirements of other data personnel, such as business or domain users, by providing more logical and GUI-based self-service layers that such users can leverage to inject their vast business knowledge into the data ecosystem and unburden data engineers from having to figure out the business glossary based on a partial view of countless business verticals.

💠 Domain Ownership:

The onboarding and execution functions fall under the physical layers of DOS and under the purview of data engineers. The Contract and Modeling layers on the right make up the logical layers and are decoupled from the physical layers to ensure federated ownership and governance for a data mesh design.

Domain ownership is facilitated through careful segregation of responsibilities across teams. Such segregation is possible through an all-encompassing view and control of the organizational landscape. As we saw above, domain teams own contract definitions to define the utopian state of data, the data engineers own dataviews that strive to achieve the utopian state, and finally, the business teams own the data models that power very specific business use cases on top of combinations of minimalistic DOS primitives.

🔐 Federated Governance:

The DOS Control Plane enables three primary capabilities through a centralized pane: Metadata Management, Resource Orchestration, and Governance. The governance component in the central control plane acts as a policy decision point and oversees enforcement of access and masking (data) policies across policy enforcement points distributed across the DOS user plane.

DOS supports attribute-based access control (ABAC), which is a superset of role-based access control (RBAC), since a role is simply one more attribute. While access policies work at the asset level, data (masking) policies can be enforced at the row and column levels.
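The two levels of policy can be sketched as follows: an access decision that compares required attributes against subject and resource attributes, and a data policy that filters rows and masks columns. The function names, policy layout, and the `pii_cleared` attribute are hypothetical choices for this illustration.

```python
def matches(required: dict, actual: dict) -> bool:
    """True when every required attribute is present with the required value."""
    return all(actual.get(key) == value for key, value in required.items())


def decide(policy: dict, subject: dict, resource: dict) -> bool:
    """Asset-level access decision, as a policy decision point might make it."""
    return matches(policy["subject"], subject) and matches(policy["resource"], resource)


def apply_data_policy(rows: list, subject: dict, masked_columns: set, row_filter) -> list:
    """Row-level filtering followed by column-level masking."""
    visible = [row for row in rows if row_filter(subject, row)]
    if subject.get("pii_cleared"):  # hypothetical attribute that lifts masking
        return [dict(row) for row in visible]
    return [
        {col: ("****" if col in masked_columns else value) for col, value in row.items()}
        for row in visible
    ]
```

A policy whose only subject requirement is `{"role": "analyst"}` behaves like RBAC, which shows in miniature why ABAC subsumes it.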

📦 Data as a Product:

The ultimate goal of any data-related activity, be it collaboration, tooling, or process-related innovation, is to achieve a basket of data products. Businesses cannot use unreliable data to power decisions and require data that is trustworthy in terms of quality, governance, and semantics.

A data operating system has the required levers or components across physical and logical layers that work with raw data from heterogeneous source systems to enable data products at the activation layer. Any user tapping into the activation layer could now blindly rely on the integrity of the data that is of high quality and serves exact business needs.